Hello everyone!! This is my first blog post on this site, so I’m both excited and scared about it. That’s why, before starting, I want to say sorry… and I promise I’ll improve in the following months.

I joined AWS in September 2020, and almost every week since then I have discussed the same topic with customers: “How can I manage my AWS networking environment at scale?” Let me start by defining “at scale”: think of 5 AWS Regions, each with its own AWS Transit Gateway, and a dynamic environment where different teams create Amazon VPCs whenever they build their own solutions. The AWS Management Console is very useful for understanding how the building blocks fit together, for troubleshooting, and for getting a nice view of the environment you have created. However, creating and managing resources through it can be challenging.

We know it, and that’s why using Infrastructure as Code (IaC) is one of the AWS best practices. You can define your AWS resources and their integration using code, which gives you several benefits:

- It helps with the accountability of your network, making it easy to control what you are building and where (in which AWS Region or account).

- You can improve your security strategy, because you can now enforce control over the code (to make sure specific security mechanisms are in place) and add CI/CD pipelines to automate checks in pre-production environments.

- More speed and less risk of human error when building new resources. Of course, you have to create your controls beforehand.

Which IaC framework should you use? Whatever you want! AWS CloudFormation and AWS CDK are the native AWS solutions, but in this post we are going to cover Terraform. Why? Because the solution we are discussing today is very specific to Terraform.

When working with Terraform, you can use loops and Terraform modules (which we’ll cover in future posts) to simplify the creation of VPCs and routing in your Transit Gateways. However, since re:Invent 2021, AWS has offered a service that natively simplifies the creation of global networks. AWS Cloud WAN provides a central dashboard to create and manage the connections between your VPCs and on-premises locations by defining your network in a policy. You tell Cloud WAN how you want to connect everything, and all the resources and routing are configured transparently for you.

How can I configure AWS Cloud WAN using Terraform?

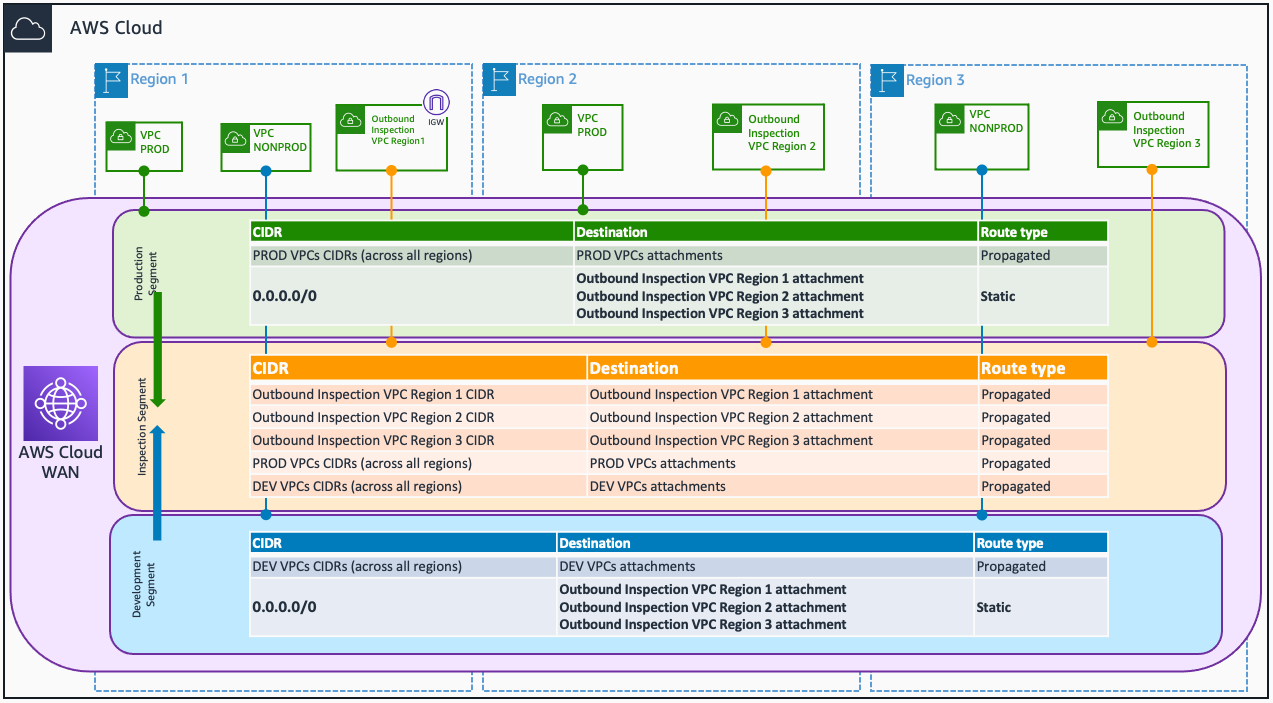

Let’s start with a “simple” architecture to demonstrate how you can build AWS Cloud WAN using Terraform. This post assumes you are already familiar with the basic concepts of the service; if not, please check the documentation. Our initial architecture is the following one:

- 3 AWS Regions.

- 2 routing domains: production (prod) and non-production (nonprod).

- Inspection of the egress traffic.

The policy is defined in a JSON document, but for Terraform we developed a data source: aws_networkmanager_core_network_policy_document. I personally prefer the data source over plain JSON, because the definition of each block (segments, routing, or attachment policies) is clearer. In addition, knowing myself, it also makes sure I don’t introduce formatting errors in the JSON document (the data source renders my definition into the JSON that the Core Network uses).

Having a look at the Core Network policy document parameters section of the documentation, we can translate the design above into the following:

```hcl
data "aws_networkmanager_core_network_policy_document" "core_nw_policy" {
  core_network_configuration {
    vpn_ecmp_support = false
    asn_ranges       = ["64520-65525"]

    edge_locations { location = "eu-west-1" }
    edge_locations { location = "us-east-1" }
    edge_locations { location = "ap-southeast-2" }
  }

  segments {
    name                          = "prod"
    require_attachment_acceptance = false
    isolate_attachments           = false
  }

  segments {
    name                          = "nonprod"
    require_attachment_acceptance = false
    isolate_attachments           = false
  }

  segments {
    name                          = "inspection"
    require_attachment_acceptance = false
    isolate_attachments           = false
  }

  attachment_policies {
    rule_number     = 100
    condition_logic = "or"

    conditions {
      type = "tag-exists"
      key  = "prod"
    }

    action {
      association_method = "constant"
      segment            = "prod"
    }
  }

  attachment_policies {
    rule_number     = 200
    condition_logic = "or"

    conditions {
      type = "tag-exists"
      key  = "nonprod"
    }

    action {
      association_method = "constant"
      segment            = "nonprod"
    }
  }

  attachment_policies {
    rule_number     = 300
    condition_logic = "or"

    conditions {
      type = "tag-exists"
      key  = "inspection"
    }

    action {
      association_method = "constant"
      segment            = "inspection"
    }
  }

  segment_actions {
    action  = "create-route"
    segment = "prod"
    destination_cidr_blocks = [
      "0.0.0.0/0"
    ]
    destinations = [
      module.ireland_inspection_vpc.core_network_attachment.id,
      module.nvirginia_inspection_vpc.core_network_attachment.id,
      module.sydney_inspection_vpc.core_network_attachment.id
    ]
  }

  segment_actions {
    action  = "create-route"
    segment = "nonprod"
    destination_cidr_blocks = [
      "0.0.0.0/0"
    ]
    destinations = [
      module.ireland_inspection_vpc.core_network_attachment.id,
      module.nvirginia_inspection_vpc.core_network_attachment.id,
      module.sydney_inspection_vpc.core_network_attachment.id
    ]
  }

  segment_actions {
    action     = "share"
    mode       = "attachment-route"
    segment    = "prod"
    share_with = ["inspection"]
  }

  segment_actions {
    action     = "share"
    mode       = "attachment-route"
    segment    = "nonprod"
    share_with = ["inspection"]
  }
}
```

Even though the policy format is out of the scope of this post (as I don’t want to write a book today), let’s quickly review the different blocks:

- edge_locations defines the AWS Regions where AWS Cloud WAN should be created.

- segments defines the routing domains to create (and in which AWS Regions you want to create them). In this example, we have the production and non-production routing domains. An extra segment is created for the Inspection VPCs.

- attachment_policies defines one of the nicest features of Cloud WAN: you can automate the association of attachments to a specific segment by using tags. Once the attachment is associated to a segment, all the routing defined for that segment applies.

- segment_actions defines either the route sharing between segments (propagation) or the creation of static routes.
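As a rough sketch of how the rendered document might be consumed, the data source exposes a json attribute that can be passed to the Core Network. The resource labels ("example") and descriptions below are placeholders of mine, not part of the original example, and the exact arguments available depend on your AWS provider version, so please check the provider documentation before copying this.

```hcl
# Sketch only: the "example" labels and descriptions are placeholders.
resource "aws_networkmanager_global_network" "example" {
  description = "Global network for the Cloud WAN example"
}

resource "aws_networkmanager_core_network" "example" {
  global_network_id = aws_networkmanager_global_network.example.id
  description       = "Core network for the Cloud WAN example"
}

# Applies the JSON rendered by the policy document data source to the core network.
resource "aws_networkmanager_core_network_policy_attachment" "example" {
  core_network_id = aws_networkmanager_core_network.example.id
  policy_document = data.aws_networkmanager_core_network_policy_document.core_nw_policy.json
}
```

Note that the policy in this post references Inspection VPC attachment IDs, so the Core Network has to exist before those attachments can be created. Keeping the policy in a separate attachment resource, as sketched here, is one way to avoid a dependency cycle.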

In only 110 lines of code we have defined our global network. And if we want to expand it to new AWS Regions, or add new segments, the only thing to do is to add new blocks. Quite nice to be honest, however… this can be improved. Let’s optimize it to the point of having a policy document that is updated dynamically whenever we update our network definition in variables or local variables.

Optimizing our Core Network policy – part I: attachment-policies

The first policy optimization is not tied to Terraform itself; rather, it’s related to the Core Network policy directly. In the attachment-policies section of the policy document parameters documentation, you will see that one of the action parameters is called tag-value-of-key. It reads the value of a specific tag key and, if there is a segment with the same name, creates the association.

In the policy definition above, we are already doing something similar: each rule checks that a tag with a specific key exists, and associates the attachment to the segment with the same name as that key. The problem is that we are creating an attachment-policies block anytime we have a new segment, and this is not scalable. Let’s use tag-value-of-key to allow attachment association for all current and future segments.

Our updated policy document is the following one:

```hcl
data "aws_networkmanager_core_network_policy_document" "core_nw_policy" {
  core_network_configuration {
    vpn_ecmp_support = false
    asn_ranges       = ["64520-65525"]

    edge_locations { location = "eu-west-1" }
    edge_locations { location = "us-east-1" }
    edge_locations { location = "ap-southeast-2" }
  }

  segments {
    name                          = "prod"
    require_attachment_acceptance = false
    isolate_attachments           = false
  }

  segments {
    name                          = "nonprod"
    require_attachment_acceptance = false
    isolate_attachments           = false
  }

  segments {
    name                          = "inspection"
    require_attachment_acceptance = false
    isolate_attachments           = false
  }

  attachment_policies {
    rule_number     = 100
    condition_logic = "or"

    conditions {
      type = "tag-exists"
      key  = "domain"
    }

    action {
      association_method = "tag"
      tag_value_of_key   = "domain"
    }
  }

  segment_actions {
    action  = "create-route"
    segment = "prod"
    destination_cidr_blocks = [
      "0.0.0.0/0"
    ]
    destinations = [
      module.ireland_inspection_vpc.core_network_attachment.id,
      module.nvirginia_inspection_vpc.core_network_attachment.id,
      module.sydney_inspection_vpc.core_network_attachment.id
    ]
  }

  segment_actions {
    action  = "create-route"
    segment = "nonprod"
    destination_cidr_blocks = [
      "0.0.0.0/0"
    ]
    destinations = [
      module.ireland_inspection_vpc.core_network_attachment.id,
      module.nvirginia_inspection_vpc.core_network_attachment.id,
      module.sydney_inspection_vpc.core_network_attachment.id
    ]
  }

  segment_actions {
    action     = "share"
    mode       = "attachment-route"
    segment    = "prod"
    share_with = ["inspection"]
  }

  segment_actions {
    action     = "share"
    mode       = "attachment-route"
    segment    = "nonprod"
    share_with = ["inspection"]
  }
}
```

We have reduced our policy definition to 83 lines of code, and our attachment_policies block won’t change regardless of the number of segments we have. Let’s continue with our optimization.
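To make the tag-based association concrete, here is a minimal sketch of a VPC attachment that would land in the prod segment under the rule above. The three input variables and the "prod_vpc" label are hypothetical names I am introducing for illustration; in a real setup these values would come from your VPC module.

```hcl
# Hypothetical inputs, not part of the original example.
variable "core_network_id" {
  type        = string
  description = "ID of the Cloud WAN core network."
}

variable "vpc_arn" {
  type        = string
  description = "ARN of the VPC to attach."
}

variable "subnet_arns" {
  type        = list(string)
  description = "ARNs of the subnets used by the attachment."
}

resource "aws_networkmanager_vpc_attachment" "prod_vpc" {
  core_network_id = var.core_network_id
  vpc_arn         = var.vpc_arn
  subnet_arns     = var.subnet_arns

  # The attachment policy reads the value of the "domain" tag and
  # associates the attachment with the segment of the same name.
  tags = {
    domain = "prod"
  }
}
```

Changing the tag value to "nonprod" or "inspection" would be enough to move the attachment to another segment, with no change to the policy document.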

Optimizing our Core Network policy – part II: edge-locations, segments, and segment-actions

The main goal today is to have an immutable policy document, so that I avoid touching it anytime I add a new AWS Region, attachment, or segment. How can we achieve this? When I discussed the Terraform data source for the Core Network policy, I said that I like it because of the simplicity of creating new blocks. I left out, on purpose, another reason: I can use dynamic blocks to create as many block definitions as I want from a set of variables.

If you think about it, I need to define my global network somewhere: AWS Regions, VPCs to create, all the VPC information, routing domains, etc. The common places to do that are variables (in a variables.tf file) or local variables (either in a locals.tf file or inside the main.tf file). The clear pattern is to have a Rosetta Stone of the network definition, so we can iterate over those values and create our dynamic blocks.

Let’s suppose that now we are adding a new AWS Region in our environment, and the following variables define the global network:

```hcl
# AWS Regions
variable "aws_regions" {
  type        = map(string)
  description = "AWS Regions to create the environment."

  default = {
    ireland   = "eu-west-1"
    nvirginia = "us-east-1"
    sydney    = "ap-southeast-2"
  }
}

# Definition of the VPCs to create in Ireland Region
variable "ireland_spoke_vpcs" {
  type        = any
  description = "Information about the VPCs to create in eu-west-1."

  default = {
    "prod" = {
      name       = "prod-eu-west-1"
      segment    = "prod"
      cidr_block = "10.0.0.0/24"
    }
    "dev" = {
      name       = "dev-eu-west-1"
      segment    = "dev"
      cidr_block = "10.0.1.0/24"
    }
  }
}

variable "ireland_inspection_vpc" {
  type        = any
  description = "Information about the Inspection VPC to create in eu-west-1."

  default = {
    name       = "inspection-eu-west-1"
    cidr_block = "10.100.0.0/16"
  }
}

# Definition of the VPCs to create in N. Virginia Region
variable "nvirginia_spoke_vpcs" {
  type        = any
  description = "Information about the VPCs to create in us-east-1."

  default = {
    "prod" = {
      name       = "prod-us-east-1"
      segment    = "prod"
      cidr_block = "10.10.0.0/24"
    }
    "dev" = {
      name       = "dev-us-east-1"
      segment    = "dev"
      cidr_block = "10.10.1.0/24"
    }
  }
}

variable "nvirginia_inspection_vpc" {
  type        = any
  description = "Information about the Inspection VPC to create in us-east-1."

  default = {
    name       = "inspection-us-east-1"
    cidr_block = "10.100.0.0/16"
  }
}

# Definition of the VPCs to create in Sydney Region
variable "sydney_spoke_vpcs" {
  type        = any
  description = "Information about the VPCs to create in ap-southeast-2."

  default = {
    "prod" = {
      name       = "prod-ap-southeast-2"
      segment    = "prod"
      cidr_block = "10.20.0.0/24"
    }
    "dev" = {
      name       = "dev-ap-southeast-2"
      segment    = "dev"
      cidr_block = "10.20.1.0/24"
    }
  }
}

variable "sydney_inspection_vpc" {
  type        = any
  description = "Information about the Inspection VPC to create in ap-southeast-2."

  default = {
    name       = "insp-ap-southeast-2"
    cidr_block = "10.100.0.0/16"
  }
}
```

Starting with the AWS Regions, the definition of the dynamic block is easy: we iterate over the AWS Region codes and we generate one edge_locations block per Region.

```hcl
locals {
  region_codes = values({ for k, v in var.aws_regions : k => v })
}

...

dynamic "edge_locations" {
  # region_codes is a local value, so it is referenced as local.region_codes
  for_each = local.region_codes
  iterator = region

  content {
    location = region.value
  }
}
```

Let’s now move to the segments definition. We don’t have a variable that holds this information directly, but we can derive it from what we have. In our variable definition, you can see that one of the attributes of each Spoke VPC is the segment it belongs to. Let’s scan all the Spoke VPC variables (in each Region) and obtain the list of routing domains. We need a new local variable to hold this logic:

```hcl
locals {
  # List of routing domains
  routing_domains = distinct(values(merge(
    { for k, v in var.ireland_spoke_vpcs : k => v.segment },
    { for k, v in var.nvirginia_spoke_vpcs : k => v.segment },
    { for k, v in var.sydney_spoke_vpcs : k => v.segment }
  )))
}

...

# We create 1 segment per routing domain
dynamic "segments" {
  for_each = local.routing_domains
  iterator = domain

  content {
    name                          = domain.value
    require_attachment_acceptance = false
    isolate_attachments           = false
  }
}

# Inspection segment
segments {
  name                          = "inspection"
  require_attachment_acceptance = false
  isolate_attachments           = false
}
```

Our local variable routing_domains merges all the Spoke VPC variables, keeping only the segment of each VPC. We only keep the values of the map (as we don’t want the keys), and we use the distinct function to have unique values. Once we have this list, we can iterate over it to create a dynamic set of segments blocks. You can see that the inspection segment is created outside; you can add it to the local variable as well if you want.
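One caveat worth knowing: when several maps passed to merge share a key, the value from the later map takes precedence. That is harmless here because every Region uses the same key for the same segment, but it would silently drop a segment if, say, one Region mapped the "dev" key to a different segment. A possible alternative, sketched below under the hypothetical name routing_domains_alt, collects the segments into lists instead of merging maps.

```hcl
locals {
  # Alternative sketch (the name routing_domains_alt is hypothetical):
  # collect the segment of every Spoke VPC into one list and deduplicate it.
  # Unlike merge(), concat() keeps every element even when two Regions
  # reuse the same map key with a different segment.
  routing_domains_alt = distinct(concat(
    [for vpc in var.ireland_spoke_vpcs : vpc.segment],
    [for vpc in var.nvirginia_spoke_vpcs : vpc.segment],
    [for vpc in var.sydney_spoke_vpcs : vpc.segment]
  ))
}
```

With the variable defaults shown earlier, both versions should yield the same two segments (prod and dev).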

What if you require a different configuration per segment? For example, you want the nonprod segment to have isolated attachments, or have attachment acceptance for the prod segment. In this case, you can create a dedicated variable or local variable with the specific definition of each segment.
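A minimal sketch of that idea, assuming a hypothetical local variable called segment_settings that holds the configuration of each segment. The data source label, the single edge location, and the chosen values are illustrative only:

```hcl
locals {
  # Hypothetical per-segment settings, keyed by segment name.
  segment_settings = {
    prod = {
      require_attachment_acceptance = true
      isolate_attachments           = false
    }
    nonprod = {
      require_attachment_acceptance = false
      isolate_attachments           = true
    }
  }
}

data "aws_networkmanager_core_network_policy_document" "per_segment_sketch" {
  core_network_configuration {
    asn_ranges = ["64520-65525"]

    edge_locations { location = "eu-west-1" }
  }

  # One segments block per entry in the map: the key is the segment name,
  # the value carries that segment's settings.
  dynamic "segments" {
    for_each = local.segment_settings
    iterator = segment

    content {
      name                          = segment.key
      require_attachment_acceptance = segment.value.require_attachment_acceptance
      isolate_attachments           = segment.value.isolate_attachments
    }
  }
}
```

The trade-off is that the list of segments is no longer derived from the Spoke VPC variables, so the map has to be kept in sync with them.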

Are we done? Check the segment_actions blocks in the initial policy: we are creating the static routes pointing to our Inspection VPCs in each of the Spoke VPC segments. Therefore, anytime we have a new segment, we need to add a new block with this static route – again, not scalable. Let’s make use again of routing_domains:

```hcl
# Static routes from prod and nonprod segments to Inspection VPCs
dynamic "segment_actions" {
  for_each = local.routing_domains
  iterator = domain

  content {
    action                  = "create-route"
    segment                 = domain.value
    destination_cidr_blocks = ["0.0.0.0/0"]
    destinations = [
      module.ireland_inspection_vpc.core_network_attachment.id,
      module.nvirginia_inspection_vpc.core_network_attachment.id,
      module.sydney_inspection_vpc.core_network_attachment.id
    ]
  }
}
```

Same procedure for the propagation of routes from the prod and nonprod segments to the inspection one:

```hcl
# Propagation from prod and nonprod segments to inspection
dynamic "segment_actions" {
  for_each = local.routing_domains
  iterator = domain

  content {
    action     = "share"
    mode       = "attachment-route"
    segment    = domain.value
    share_with = ["inspection"]
  }
}
```

Let’s check all the changes together:

```hcl
locals {
  # We get the list of AWS Region codes from var.aws_regions
  region_codes = values({ for k, v in var.aws_regions : k => v })

  # List of routing domains
  routing_domains = distinct(values(merge(
    { for k, v in var.ireland_spoke_vpcs : k => v.segment },
    { for k, v in var.nvirginia_spoke_vpcs : k => v.segment },
    { for k, v in var.sydney_spoke_vpcs : k => v.segment }
  )))
}

data "aws_networkmanager_core_network_policy_document" "core_nw_policy" {
  core_network_configuration {
    vpn_ecmp_support = false
    asn_ranges       = ["64520-65525"]

    dynamic "edge_locations" {
      # region_codes is a local value, so it is referenced as local.region_codes
      for_each = local.region_codes
      iterator = region

      content {
        location = region.value
      }
    }
  }

  # We create 1 segment per routing domain
  dynamic "segments" {
    for_each = local.routing_domains
    iterator = domain

    content {
      name                          = domain.value
      require_attachment_acceptance = false
      isolate_attachments           = false
    }
  }

  # Inspection segment
  segments {
    name                          = "inspection"
    require_attachment_acceptance = false
    isolate_attachments           = false
  }

  attachment_policies {
    rule_number     = 100
    condition_logic = "or"

    conditions {
      type = "tag-exists"
      key  = "domain"
    }

    action {
      association_method = "tag"
      tag_value_of_key   = "domain"
    }
  }

  # Static routes from prod and nonprod segments to Inspection VPCs
  dynamic "segment_actions" {
    for_each = local.routing_domains
    iterator = domain

    content {
      action                  = "create-route"
      segment                 = domain.value
      destination_cidr_blocks = ["0.0.0.0/0"]
      destinations = [
        module.ireland_inspection_vpc.core_network_attachment.id,
        module.nvirginia_inspection_vpc.core_network_attachment.id,
        module.sydney_inspection_vpc.core_network_attachment.id
      ]
    }
  }

  # Propagation from prod and nonprod segments to inspection
  dynamic "segment_actions" {
    for_each = local.routing_domains
    iterator = domain

    content {
      action     = "share"
      mode       = "attachment-route"
      segment    = domain.value
      share_with = ["inspection"]
    }
  }
}
```

We have reduced our policy definition to 78 lines of code, and the policy will be updated dynamically anytime we expand our network in our variables or local variables. I would say this is enough, but let’s add something new to our network requirements: now we also have to inspect traffic between segments (East-West traffic).

The next (and don’t worry, last) section will discuss the changes needed (and how to optimize the policy definition) when having East-West traffic inspection in Cloud WAN.

Optimizing our Core Network policy – part III: East-West traffic inspection

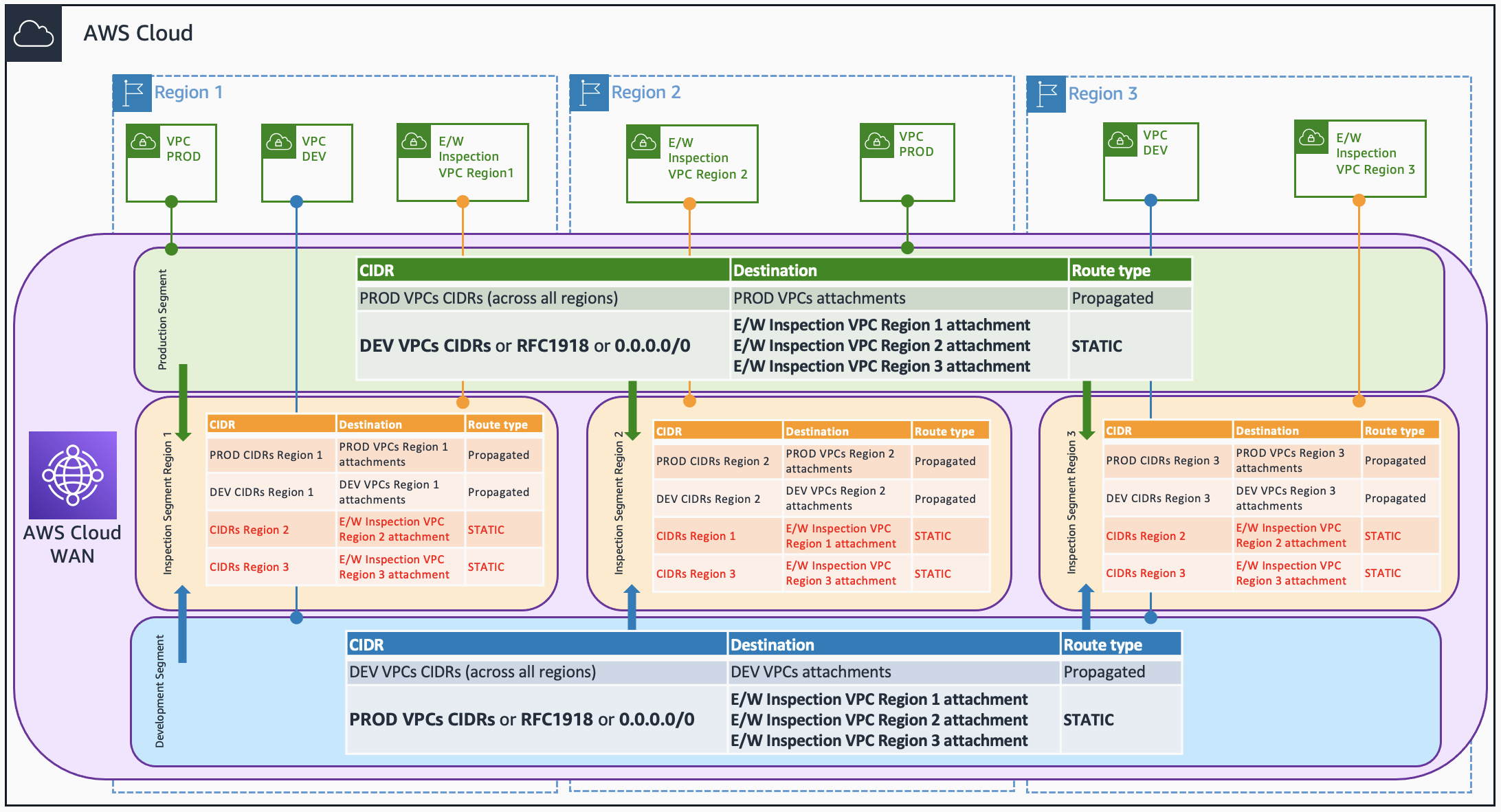

The last iteration of our Core Network policy looks quite nice to be honest – if I can stop being humble for a moment. However, the initial use case we chose does not cover one common requirement I usually discuss with customers: traffic should be inspected between segments (East-West traffic). We can also define East-West traffic as traffic inspection within segments, but let’s focus on the first use case. Now our new design looks like the image below:

First of all, we need to create one inspection segment per AWS Region. Note that, for simplicity in the diagram, the segment looks like it is created in the AWS Region of where the Inspection VPC is associated. However, each inspection segment has to be created in all the AWS Regions. How this is going to look like in our Terraform code? Well, we can use the keys of the aws_regions variable map to create unique names for our inspection segments.

```hcl
locals {
  region_names = keys({ for k, v in var.aws_regions : k => v })
}

...

# We create 1 inspection segment per AWS Region
dynamic "segments" {
  for_each = local.region_names
  iterator = region

  content {
    name                          = "inspection${region.value}"
    require_attachment_acceptance = false
    isolate_attachments           = false
  }
}
```

The second thing we need to do is create static routes in each inspection segment: the destinations are the CIDR blocks of the VPCs in the other AWS Regions, and the next hop is the Inspection VPC local to those VPCs. Why do we have to do it? Well, to avoid asymmetric traffic in our firewalls, we need to inspect any inter-Region traffic twice.

Can we use summarization to simplify this static route configuration? In our design (image above) we cannot: because the prod and nonprod segments are propagated to the inspection segments, their VPCs appear there as more specific routes. That’s why we need static routes using the same CIDR blocks to force the routing through the Inspection VPCs.

And yeah, the first time I was building the architecture I was thinking: “wow, this is not scalable at all for hundreds of VPCs! What about adding new Regions?”. But you see where this is going, we can optimize the creation of these blocks following the same strategies we followed in the previous sections:

- First of all, we need to create a local variable with some information: the Spoke VPC CIDR blocks of each AWS Region, plus the Inspection VPC attachment ID. The reason to create this local variable is easy, we have to create static routes at scale (and dynamically), so we need to iterate over something – check in the Core Network policy documentation which values are needed when creating static routes.

```hcl
locals {
  # Information about the CIDR blocks and Inspection VPC attachments of each AWS Region
  region_information = {
    ireland = {
      cidr_blocks               = values({ for k, v in var.ireland_spoke_vpcs : k => v.cidr_block })
      inspection_vpc_attachment = module.ireland_inspection_vpc.core_network_attachment.id
    }
    nvirginia = {
      cidr_blocks               = values({ for k, v in var.nvirginia_spoke_vpcs : k => v.cidr_block })
      inspection_vpc_attachment = module.nvirginia_inspection_vpc.core_network_attachment.id
    }
    sydney = {
      cidr_blocks               = values({ for k, v in var.sydney_spoke_vpcs : k => v.cidr_block })
      inspection_vpc_attachment = module.sydney_inspection_vpc.core_network_attachment.id
    }
  }
}
```

- The next thing is to determine how many static route blocks we need for the inter-Region inspection traffic. The combination is quite straightforward: in each inspection segment, we have to create a static route pointing to every other AWS Region (all of them except the local one). I’m sure there are several ways to build this combination; in my case, I decided to create a map with two values (inspection and destination), which are used to pick the specific values from the local variable created before (region_information).

```hcl
locals {
  # We create a list of maps with the following format:
  # - inspection --> inspection segment to create the static routes
  # - destination --> destination AWS Region, to add the destination CIDRs + Inspection VPC of that Region
  region_combination = flatten(
    [for region1 in local.region_names :
      [for region2 in local.region_names :
        {
          inspection  = region1
          destination = region2
        }
        if region1 != region2
      ]
    ]
  )
}
```

With these two local variables, now we can iterate to create as many static route blocks as we need for the inter-Region traffic inspection:

```hcl
# Creation of static routes - per AWS Region, we need to point those VPC CIDRs to pass through the local Inspection VPC in the other inspection segments
# For example, N. Virginia CIDRs to Inspection VPC in N. Virginia --> inspectionireland & inspectionsydney
dynamic "segment_actions" {
  for_each = local.region_combination
  iterator = combination

  content {
    action                  = "create-route"
    segment                 = "inspection${combination.value.inspection}"
    destination_cidr_blocks = local.region_information[combination.value.destination].cidr_blocks
    destinations            = [local.region_information[combination.value.destination].inspection_vpc_attachment]
  }
}
```

How do we manage the inclusion of new VPCs or new AWS Regions? With new VPCs, if you keep this information in the same place where you have your current VPCs, the update will be automatic in the next apply. For new AWS Regions, you will need to update the region_information local variable with the new VPC CIDRs and the Inspection VPC attachment ID. However, the static routes to create (derived from the region_combination local variable) will be updated automatically.
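To see what that automatic update means in practice, here is a small standalone sketch of the same nested for expression with the Region names written out. The example_ prefixed names and the output block are mine, added only so the snippet can be evaluated on its own.

```hcl
locals {
  # Same keys as var.aws_regions in this post, written out for illustration.
  example_region_names = ["ireland", "nvirginia", "sydney"]

  # Every ordered pair of different Regions.
  example_region_combination = flatten([
    for region1 in local.example_region_names : [
      for region2 in local.example_region_names : {
        inspection  = region1
        destination = region2
      } if region1 != region2
    ]
  ])
}

output "example_region_combination" {
  value = local.example_region_combination
}

# Resulting pairs (inspection -> destination):
#   ireland   -> nvirginia, sydney
#   nvirginia -> ireland, sydney
#   sydney    -> ireland, nvirginia
#
# 3 Regions produce 3 * 2 = 6 static route blocks. A fourth Region would
# produce 4 * 3 = 12, with no change to the dynamic block itself.
```

In general, N Regions produce N * (N - 1) create-route blocks, which is exactly the kind of growth you don’t want to maintain by hand.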

Let’s check the last iteration of our Core Network policy document:

```hcl
locals {
  # We get the list of AWS Region codes from var.aws_regions
  region_codes = values({ for k, v in var.aws_regions : k => v })

  # We get the list of AWS Region names from var.aws_regions
  region_names = keys({ for k, v in var.aws_regions : k => v })

  # List of routing domains
  routing_domains = distinct(values(merge(
    { for k, v in var.ireland_spoke_vpcs : k => v.segment },
    { for k, v in var.nvirginia_spoke_vpcs : k => v.segment },
    { for k, v in var.sydney_spoke_vpcs : k => v.segment }
  )))

  # Information about the CIDR blocks and Inspection VPC attachments of each AWS Region
  region_information = {
    ireland = {
      cidr_blocks               = values({ for k, v in var.ireland_spoke_vpcs : k => v.cidr_block })
      inspection_vpc_attachment = module.ireland_inspection_vpc.core_network_attachment.id
    }
    nvirginia = {
      cidr_blocks               = values({ for k, v in var.nvirginia_spoke_vpcs : k => v.cidr_block })
      inspection_vpc_attachment = module.nvirginia_inspection_vpc.core_network_attachment.id
    }
    sydney = {
      cidr_blocks               = values({ for k, v in var.sydney_spoke_vpcs : k => v.cidr_block })
      inspection_vpc_attachment = module.sydney_inspection_vpc.core_network_attachment.id
    }
  }

  # We create a list of maps with the following format:
  # - inspection --> inspection segment to create the static routes
  # - destination --> destination AWS Region
  region_combination = flatten(
    [for region1 in local.region_names :
      [for region2 in local.region_names :
        {
          inspection  = region1
          destination = region2
        }
        if region1 != region2
      ]
    ]
  )
}

# AWS Cloud WAN Core Network Policy
data "aws_networkmanager_core_network_policy_document" "core_network_policy" {
  core_network_configuration {
    vpn_ecmp_support = false
    asn_ranges       = ["64520-65525"]

    dynamic "edge_locations" {
      for_each = local.region_codes
      iterator = region

      content {
        location = region.value
      }
    }
  }

  # We create 1 segment per routing domain
  dynamic "segments" {
    for_each = local.routing_domains
    iterator = domain

    content {
      name                          = domain.value
      require_attachment_acceptance = false
      isolate_attachments           = false
    }
  }

  # We create 1 inspection segment per AWS Region
  dynamic "segments" {
    for_each = local.region_names
    iterator = region

    content {
      name                          = "inspection${region.value}"
      require_attachment_acceptance = false
      isolate_attachments           = false
    }
  }

  attachment_policies {
    rule_number     = 100
    condition_logic = "or"

    conditions {
      type = "tag-exists"
      key  = "domain"
    }

    action {
      association_method = "tag"
      tag_value_of_key   = "domain"
    }
  }

  # We share the routing_domain segment (prod, dev, etc.) routes with the inspection segments
  dynamic "segment_actions" {
    for_each = local.routing_domains
    iterator = domain

    content {
      action     = "share"
      mode       = "attachment-route"
      segment    = domain.value
      share_with = [for r in local.region_names : "inspection${r}"]
    }
  }

  # Static routes from dev and prod segments to Inspection VPCs
  dynamic "segment_actions" {
    for_each = local.routing_domains
    iterator = domain

    content {
      action                  = "create-route"
      segment                 = domain.value
      destination_cidr_blocks = ["0.0.0.0/0"]
      destinations            = values({ for k, v in local.region_information : k => v.inspection_vpc_attachment })
    }
  }

  # Creation of static routes - per AWS Region, we need to point those VPC CIDRs to pass through the local Inspection VPC in the other inspection segments
  # For example, N. Virginia CIDRs to Inspection VPC in N. Virginia --> inspectionireland & inspectionsydney
  dynamic "segment_actions" {
    for_each = local.region_combination
    iterator = combination

    content {
      action                  = "create-route"
      segment                 = "inspection${combination.value.inspection}"
      destination_cidr_blocks = local.region_information[combination.value.destination].cidr_blocks
      destinations            = [local.region_information[combination.value.destination].inspection_vpc_attachment]
    }
  }
}
```

We have the definition of our Core Network policy ready, and it updates our global network dynamically anytime we add new VPCs or AWS Regions. And everything in 93 lines of code. That’s it: the only thing we need to worry about now is managing the variables and/or local variables where we centralize our global network definition, and the Core Network policy will be updated anytime we apply our infrastructure definition again.

You can find these examples (and more) of traffic inspection patterns with AWS Cloud WAN in this aws-samples repository.

Last thing: first post, a lot of complex topics (I feel you). Don’t worry, in future posts we will dive deep into specific aspects of managing AWS networking infrastructure using Terraform (modules, dynamic blocks, or loops). Tell us in the comments which topics you find interesting and want to know more about, and we will do our best to prioritize them.

Have fun building in AWS!
